The most coveted hardware is usually a phone or a game console that's sold out everywhere, but this year it seems everyone in the tech industry is willing to wait months and spend heavily on a product you'll probably never see: Nvidia Corp.'s H100 AI accelerator.

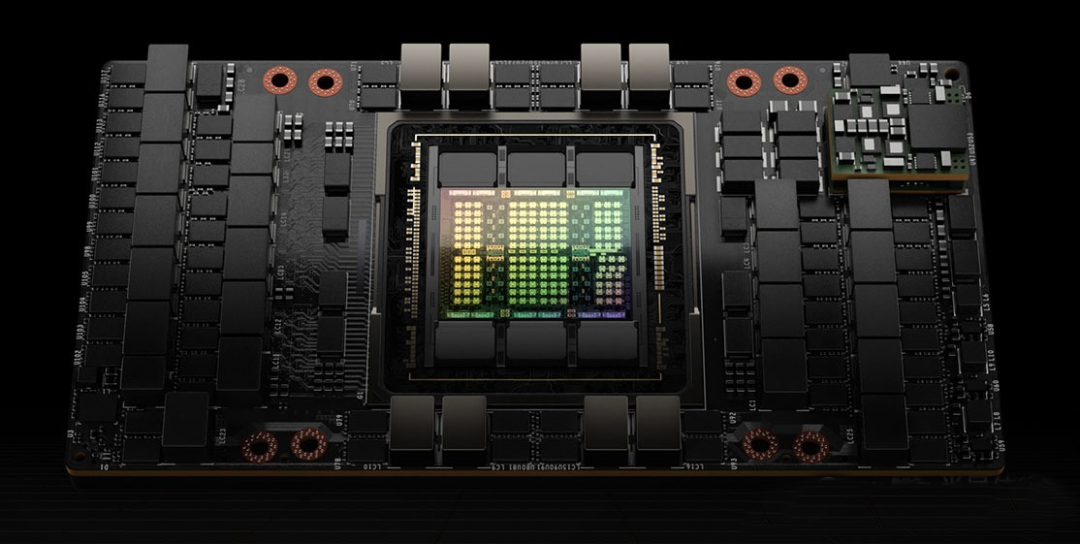

Nvidia's chips have arguably become the most critical technology driving the artificial intelligence boom. With 80 billion transistors, the H100 is the workhorse of choice for training the large language models that power applications like OpenAI's ChatGPT, and it has helped Nvidia dominate the AI chip market.

Demand for the H100 remains intense even as rivals such as Advanced Micro Devices Inc. [...]. An H100 currently sells for $57,000 on hardware vendor CDW's online store, and the data centers of its biggest buyers are filled with thousands of them.

When Nvidia CEO Jensen Huang delivered the company's first AI server, equipped with an older-generation graphics processing unit, to OpenAI in 2016, few could have predicted the role such chips would play in the ChatGPT-fueled revolution to come. At the time, Nvidia's graphics cards were synonymous with video games, not machine learning. But Huang recognized early on that their unique architecture, which excels at so-called parallel computing, was better suited than traditional processors from companies like Intel to the large-scale, simultaneous data processing that AI models require.

To support OpenAI's efforts, investor Microsoft ended up building a supercomputer with about 20,000 Nvidia A100 GPUs (the predecessor to the H100). Amazon.com Inc., Alphabet Inc.'s Google, Oracle Corp. and Meta Platforms Inc. soon placed similarly large orders for the H100 to build out their cloud infrastructure and data centers, which Huang now calls "AI factories." Chinese companies are even racing to stock up on cut-down versions of Nvidia GPUs whose performance is capped to comply with U.S. export controls on semiconductors. Delivery times for the chips can stretch beyond six months. This spring, Elon Musk joked: "GPUs are harder to get than drugs right now."

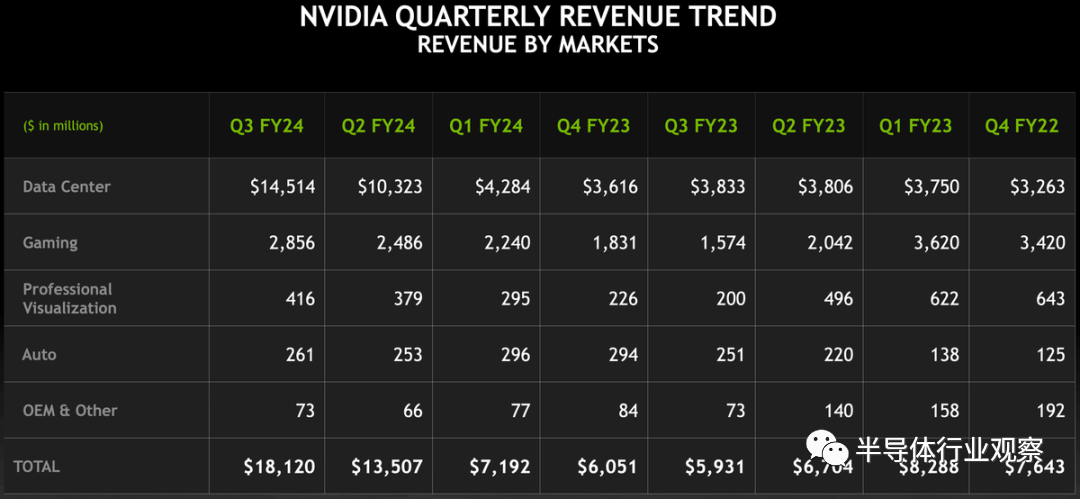

Complaints aside, the blockbuster product line pushed Nvidia's market value past $1 trillion and sent its revenue soaring. In the most recent quarter, its data center unit generated sales of $14.5 billion, nearly four times what it brought in during the same period last year.

But the GPU bottleneck has also made the industry aware of the risks of relying on a single supplier for such a critical component of the AI stack. Google has invested heavily in its in-house TPU chips to reduce costs and improve performance, while Amazon and Microsoft have recently unveiled their own custom AI accelerators. Meanwhile, Intel is promoting its Gaudi 2 processor as an alternative to the H100, and AMD says its new MI300 will help unlock an AI chip market it expects to reach $400 billion in the coming years.

For some tech giants, turning to home-grown chips could create an awkward frenemy situation if those chips become popular. On the one hand, Amazon and Google don't want to become overly reliant on Nvidia; on the other, they don't want to damage their relationship with the world's most valuable chipmaker and potentially jeopardize future access to its latest and greatest GPUs. Huang told Bloomberg earlier this year that he doesn't mind if his biggest customers also become his competitors and that he won't treat them differently.

Regardless, it's unclear whether this new AI chip competition will significantly erode Nvidia's lead in 2024. Last month, Nvidia announced an upgrade to its AI processor, dubbed the H200, which it said would begin shipping in the second quarter of next year, with Amazon and Google among the first customers.

GPUs far outsell CPUs

Accelerators, used primarily for artificial intelligence applications, drove 29% year-over-year growth in the server semiconductor and component market in the third quarter of 2023, according to market research firm Dell'Oro Group, which expects accelerator revenue to be double CPU revenue this year.

"The server and storage system components market is expected to grow 11% for all of 2023, driven primarily by accelerators. Excluding accelerators, revenue is expected to decline 27%, as inventory adjustments by system vendors and hyperscale cloud providers have reduced demand for general-purpose computing.

"Looking ahead to 2024, we expect strong double-digit growth in accelerator revenue. Additionally, our forecasts indicate that the market will recover broadly across component categories, including CPUs, memory, storage drives and network interface cards (NICs), as vendors rebuild inventory in anticipation of healthier server demand. As unit volumes grow in these component categories, we expect prices to rise with increased demand and the transition to next-generation server platforms," Fung explained.

Nvidia led all vendors in server and storage system component revenue in Q3 2023, driven by its GPU accelerators, followed by Intel and Samsung. Accelerator revenue is expected to surpass CPU revenue for the first time in 2023, reflecting the shift toward accelerated computing.

NIC shipments dipped in the first half of 2023 but returned to growth in the third quarter, thanks to increased adoption of high-speed ports and smart NICs, especially for accelerated computing.

GPU revenue is expected to grow 70% in 2024. While NVIDIA currently dominates the market, new competing products from AMD and Intel, as well as the emergence of custom accelerators from hyperscale cloud service providers, pose potential challenges.

NVIDIA leaps to No. 1 in semiconductors

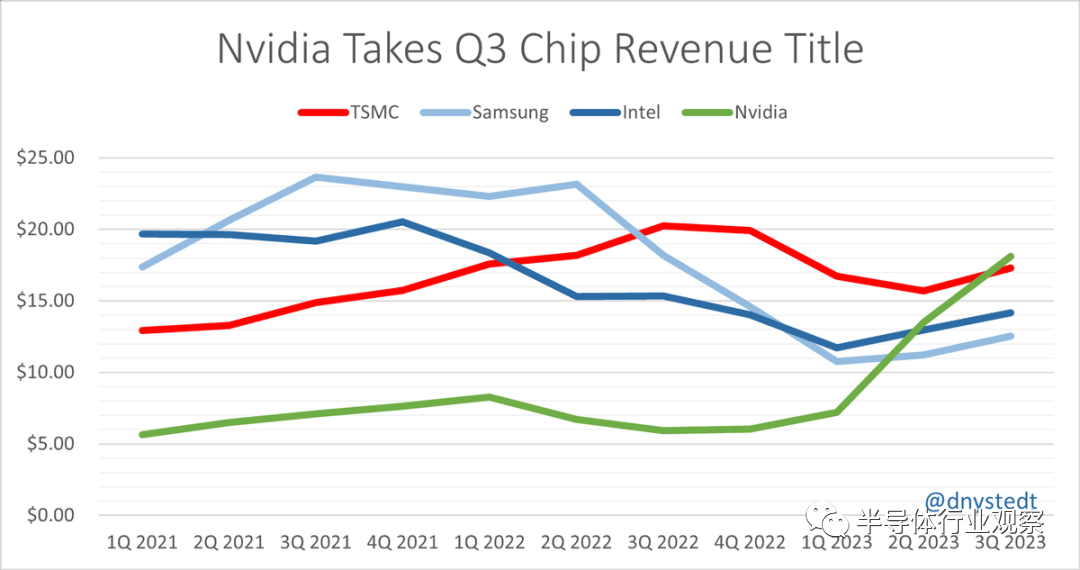

Nvidia has jumped from fourth to first place in a newly published ranking of chip industry revenue. Dan Nystedt, a Taipei-based financial analyst, pointed out that with its third-quarter results, Nvidia took the revenue crown from chipmaking giant TSMC.

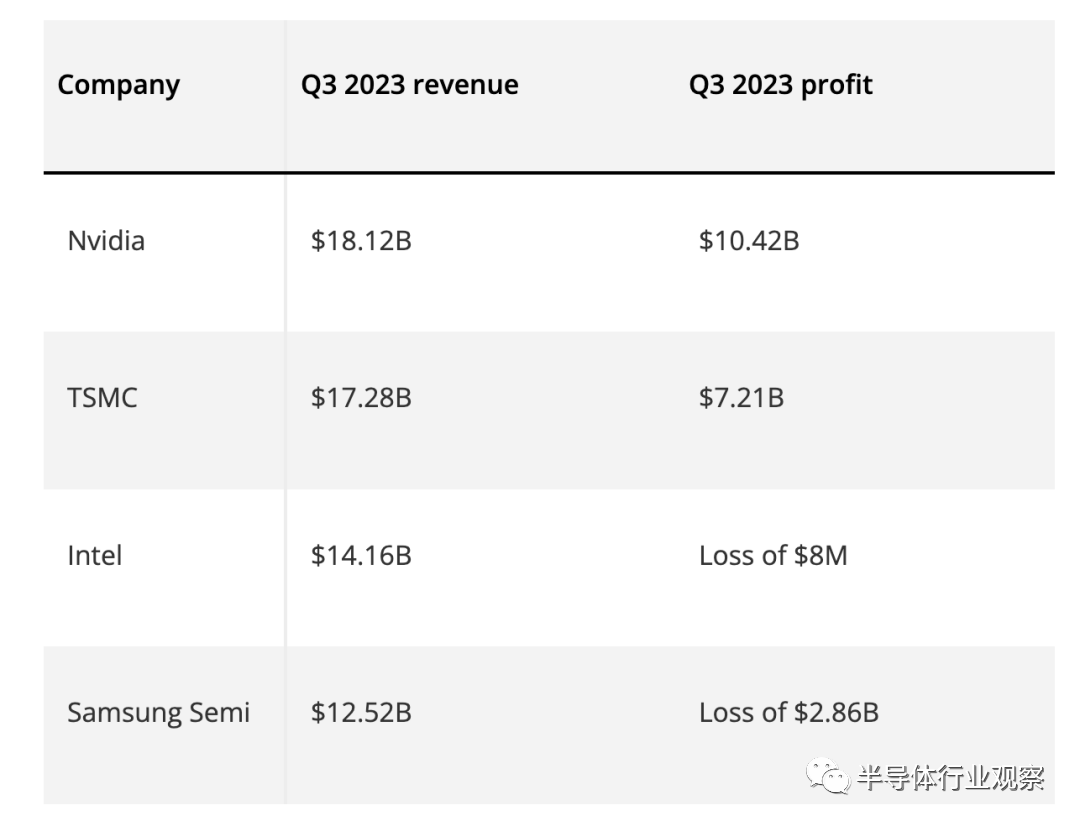

Readers who follow investing and finance will have seen our coverage of Nvidia's earnings surge, confirmed by the company's third-quarter results for fiscal 2024 (the quarter ended October 2023). Nvidia posted remarkable results, with quarterly revenue of $18.12 billion, up 206% year over year. Its profits also rose, and a chart published by Nystedt shows that by this measure, too, Nvidia surpassed its chip industry rivals in the third quarter of 2023.
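A 206% year-over-year increase is easy to misread as a roughly twofold jump. The short Python sketch below uses only the $18.12 billion and 206% figures above to back out the year-earlier quarter they imply. It assumes the growth rate is measured against the same quarter a year earlier and ignores rounding in the reported figures; the helper names are illustrative, not from any source.

```python
# Back out the year-earlier revenue implied by a reported year-over-year
# growth rate. "Up 206%" means the new figure is (1 + 2.06) times the old
# one, not 2.06 times it.


def implied_prior_revenue(current: float, yoy_growth_pct: float) -> float:
    """Return the prior-period value implied by `current` and a growth percentage."""
    return current / (1 + yoy_growth_pct / 100)


def growth_multiple(current: float, prior: float) -> float:
    """Return how many times larger `current` is than `prior`."""
    return current / prior


if __name__ == "__main__":
    q3_revenue_bn = 18.12  # reported quarterly revenue, in $ billions
    prior_bn = implied_prior_revenue(q3_revenue_bn, 206)
    print(f"Implied year-earlier revenue: ${prior_bn:.2f}B")
    print(f"Growth multiple: {growth_multiple(q3_revenue_bn, prior_bn):.2f}x")
```

Run as written, it reports an implied year-earlier quarter of about $5.92 billion, meaning the latest quarter is roughly 3.06 times as large.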

Nvidia's gains are supported by several highly successful business segments that have a multiplier effect on its revenue and profits. The latest financial report, shared with investors earlier this week, showed clear evidence of a dramatic shift in revenue.

While chip industry rivals such as TSMC, Samsung, and Intel all made some degree of progress in 2023 (in both revenue and profits), you can clearly see the golden effect of having multiple business units supplying a wide range of technology to an AI market with an extreme thirst for it.

Nvidia's data center business is its rising star, although most of its businesses have grown impressively since last year. The company has placed increasing demands on TSMC to manufacture the chips used to accelerate AI data centers. Thanks to its valuable intellectual property, however, Nvidia earns considerably more revenue and profit per chip than TSMC does on the production side. Nvidia is riding high right now, but perhaps TSMC will come back; as the old fable tells us, "slow and steady wins the race."

It's worth noting that Nvidia's total revenue figure includes its other businesses, such as software licensing. Samsung, meanwhile, was hurt by a sharp downturn in the memory market, which highlights the many factors that shape total revenue.

It will be interesting to look back a year from now on the relative performance of these four prominent chip industry competitors.